Datasets

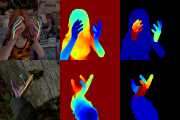

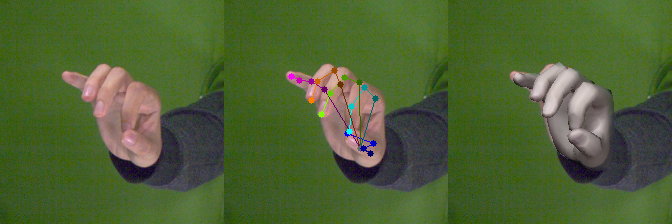

Rendered Handpose Dataset

This dataset was used to train the convolutional networks in our paper "Learning to Estimate 3D Hand Pose from Single RGB Images".

It contains 41258 training and 2728 testing samples. Each sample provides:

| - | RGB image (320x320 pixels) |

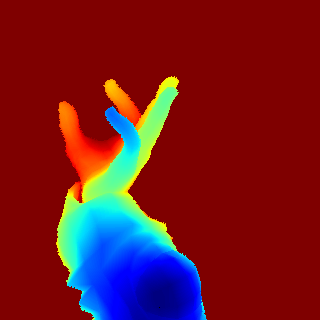

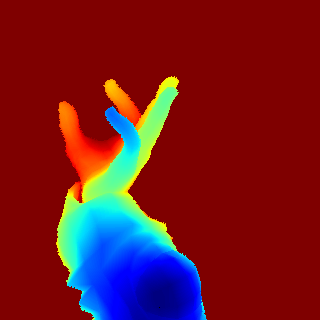

| - | Depth map (320x320 pixels) |

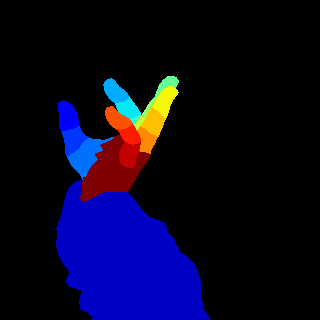

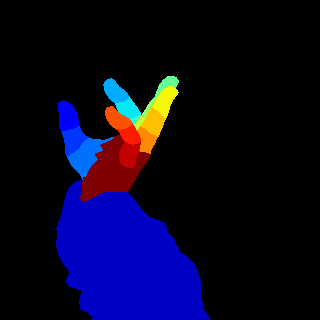

| - | Segmentation masks (320x320 pixels) for the classes: background, person, three classes for each finger and one for each palm |

| - | 21 Keypoints for each hand with their uv coordinates in the image frame, xyz coordinates in the world frame and a visibility indicator

|

| - | Intrinsic Camera Matrix K |
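The per-sample annotations above are linked by the pinhole camera model: for a 3D keypoint (x, y, z) expressed in camera coordinates, the homogeneous image point is K·(x, y, z)ᵀ, and dividing by its third component gives (u, v). The sketch below is a minimal illustration, not the dataset's loader or file format. `HandposeSample`, `project`, `max_reprojection_error` and all field names are hypothetical. It assumes the xyz coordinates coincide with the camera frame (projection with K alone requires this). It also derives the number of segmentation classes from the description above, assuming two hands per sample; the actual label ordering is not stated here and is not assumed.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Background + person + two hands x (5 fingers x 3 classes + 1 palm class).
# Assumes two hands per sample; label ids/ordering are NOT assumed here.
NUM_SEG_CLASSES = 2 + 2 * (5 * 3 + 1)  # = 34

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


@dataclass
class HandposeSample:
    """Hypothetical container for one sample's keypoint annotations."""
    uv: List[Tuple[float, float]]  # 2D keypoints in pixels (21 per hand)
    xyz: List[Vec3]                # 3D keypoints, ASSUMED to be in the camera frame
    visible: List[bool]            # per-keypoint visibility indicator
    K: Mat3                        # 3x3 intrinsic camera matrix


def project(point: Vec3, K: Mat3) -> Tuple[float, float]:
    """Pinhole projection of a camera-frame point: h = K @ point, (u, v) = h[:2] / h[2]."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    # Homogeneous image coordinates, one row of K at a time.
    h = [K[r][0] * x + K[r][1] * y + K[r][2] * z for r in range(3)]
    return h[0] / h[2], h[1] / h[2]


def max_reprojection_error(sample: HandposeSample, visible_only: bool = True) -> float:
    """Largest pixel distance between the stored uv and the K-projected xyz."""
    worst = 0.0
    for (u, v), p, vis in zip(sample.uv, sample.xyz, sample.visible):
        if visible_only and not vis:
            continue  # skip keypoints flagged as not visible
        pu, pv = project(p, sample.K)
        worst = max(worst, ((pu - u) ** 2 + (pv - v) ** 2) ** 0.5)
    return worst
```

With a synthetic K (fx = fy = 300, principal point at the centre of a 320x320 image, i.e. `((300.0, 0.0, 160.0), (0.0, 300.0, 160.0), (0.0, 0.0, 1.0))`), `project((0.25, -0.125, 0.5), K)` returns `(310.0, 85.0)`. A near-zero `max_reprojection_error` on real samples would support the camera-frame assumption; a large one would indicate that an extrinsic transform is needed first.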

It was created with freely available characters from www.mixamo.com and rendered with www.blender.org.

For more details on how the dataset was created, please see the paper mentioned above.

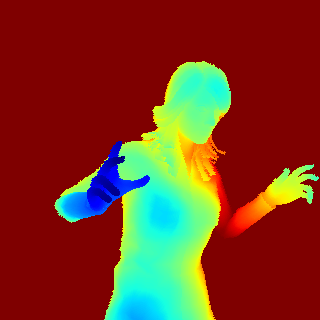

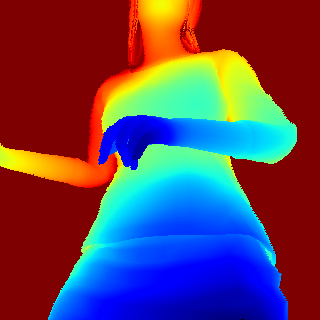

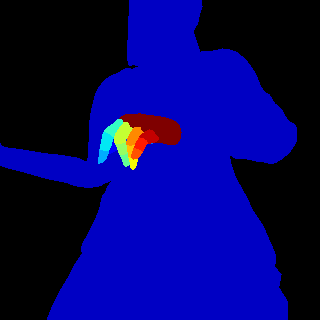

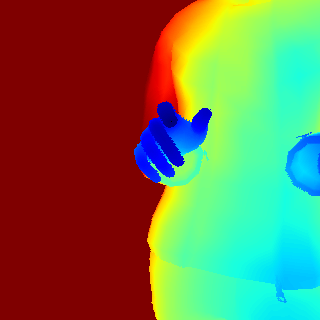

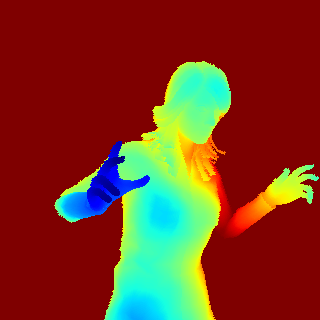

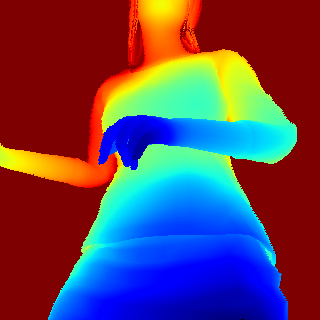

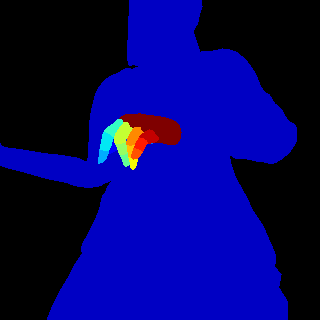

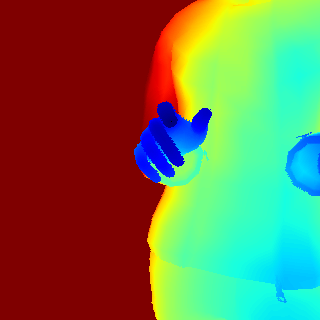

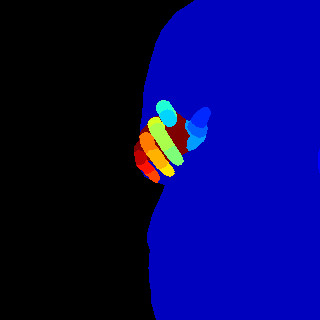

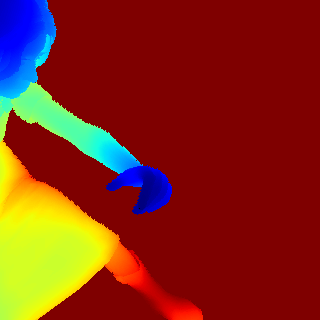

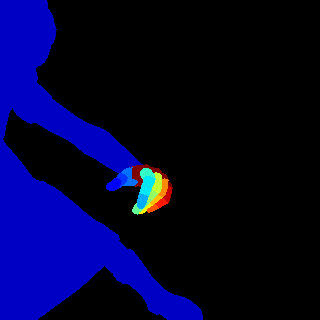

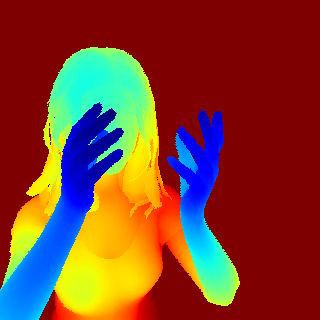

Examples

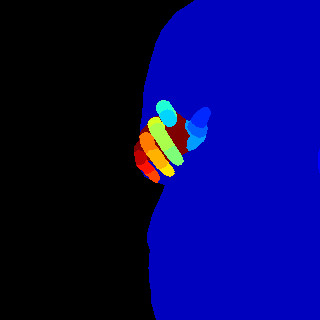

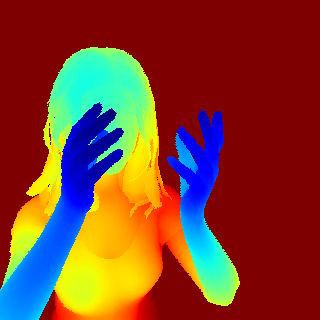

| RGB + Keypoints |

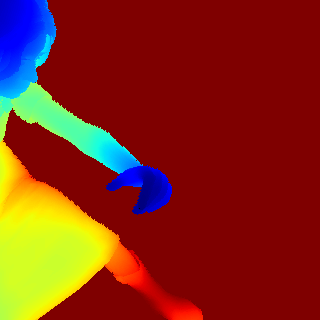

Depth |

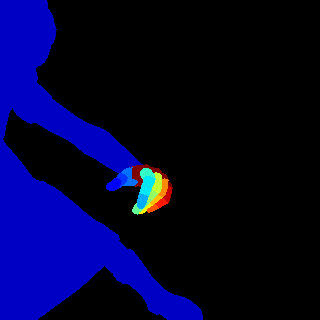

Segmentation |

|

RGB + Keypoints |

Depth |

Segmentation |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Terms of use

This dataset is provided for research purposes only and without any warranty. Any commercial use is prohibited. If you use the dataset or parts of it in your research, you must cite the following paper:

@TechReport{zb2017hand,
  author = {Christian Zimmermann and Thomas Brox},
  title = {Learning to Estimate 3D Hand Pose from Single RGB Images},
  institution = {arXiv:1705.01389},
  year = {2017},
  note = "https://arxiv.org/abs/1705.01389",
  url = "https://lmb.informatik.uni-freiburg.de/projects/hand3d/"
}


Dataset

The dataset ships with minimal examples that browse the dataset and display samples: one for Python users and one for MATLAB users. See the README for more information.

Download Rendered Handpose Dataset (7.1GB)

Contact

For questions about the dataset, please contact Christian Zimmermann.